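I want to mount an ADLS Gen2 storage account in Azure Databricks, but the Azure account I am using does not have permission to create a service principal. So I am trying to mount the container with an access key instead, but I keep getting an error.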

spark.conf.set("fs.azure.account.key.azadfdatalakegen2.dfs.core.windows.net",dbutils.secrets.get(scope="azdatabricks-adlsgen2SA", key="Azdatrbricks-adlsgen2-accesskeys"))

dbutils.fs.mount(

source = "abfss://raw@azadfdatalakegen2.dfs.core.windows.net/",

mount_point = "/mnt/raw_adlsmnt")

I keep getting the following error message:

> ---------------------------------------------------------------------------

> ExecutionError Traceback (most recent call last)

> <command-555436758533424> in <module>

> 1 spark.conf.set("fs.azure.account.key.azadfdatalakegen2.dfs.core.windows.net",dbutils.secrets.get(scope="azdatabricks-adlsgen2SA", key="Azdatrbricks-adlsgen2-accesskeys"))

> ----> 2 dbutils.fs.mount(

> 3 source = "abfss://raw@azadfdatalakegen2.dfs.core.windows.net/",

> 4 mount_point = "/mnt/raw_adlsmnt")

>

> /databricks/python_shell/dbruntime/dbutils.py in f_with_exception_handling(*args, **kwargs)

> 387 exc.__context__ = None

> 388 exc.__cause__ = None

> --> 389 raise exc

> 390

> 391 return f_with_exception_handling

>

> ExecutionError: An error occurred while calling o548.mount. : java.lang.NullPointerException: authEndpoint

> at shaded.databricks.v20180920_b33d810.com.google.common.base.Preconditions.checkNotNull(Preconditions.java:204)

> at shaded.databricks.v20180920_b33d810.org.apache.hadoop.fs.azurebfs.oauth2.AzureADAuthenticator.getTokenUsingClientCreds(AzureADAuthenticator.java:84)

> at com.databricks.backend.daemon.dbutils.DBUtilsCore.verifyAzureOAuth(DBUtilsCore.scala:803)

> at com.databricks.backend.daemon.dbutils.DBUtilsCore.verifyAzureFileSystem(DBUtilsCore.scala:814)

> at com.databricks.backend.daemon.dbutils.DBUtilsCore.createOrUpdateMount(DBUtilsCore.scala:734)

> at com.databricks.backend.daemon.dbutils.DBUtilsCore.mount(DBUtilsCore.scala:776)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

> at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:380)

> at py4j.Gateway.invoke(Gateway.java:295)

> at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

> at py4j.commands.CallCommand.execute(CallCommand.java:79)

> at py4j.GatewayConnection.run(GatewayConnection.java:251)

> at java.lang.Thread.run(Thread.java:748)

Is there any way to mount an ADLS Gen2 container using an access key?

Databricks no longer recommends mounting external data locations to the Databricks File System; see "Mounting cloud object storage on Azure Databricks".

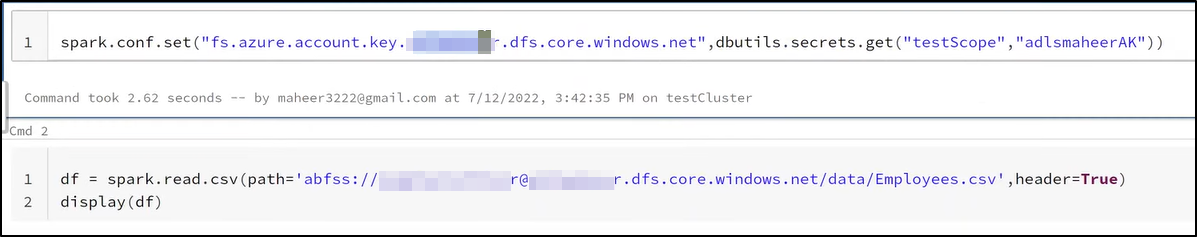

The recommended approach is to set the configuration on the Spark session to access ADLS Gen2, and then access the storage files directly by URL.

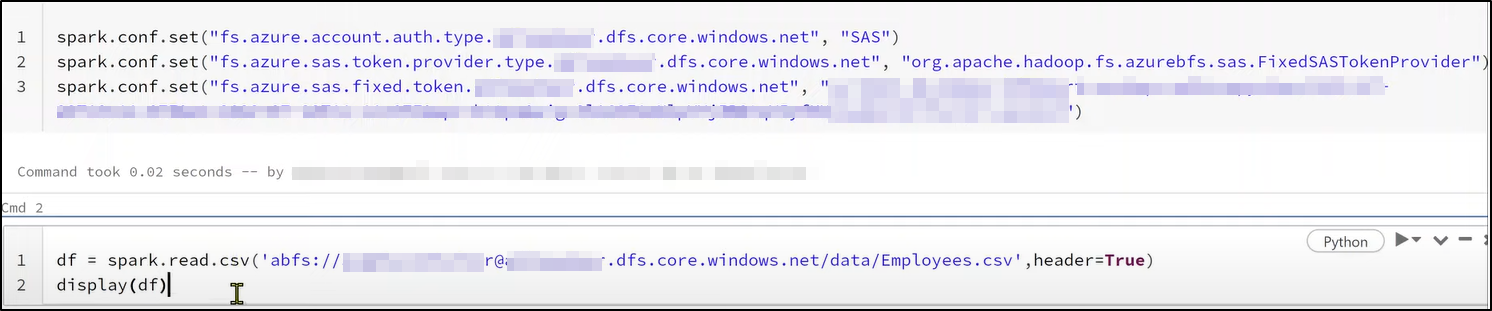
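For the access-key case asked about in the question, that approach might look like the following minimal sketch. The helper names are hypothetical, and `spark` and `dbutils` are assumed to be the objects a Databricks notebook provides; the storage account, container, and secret names are the ones from the question.

```python
def account_key_conf(storage_account: str) -> str:
    """Spark config key that holds the access key for one ADLS Gen2 account."""
    return f"fs.azure.account.key.{storage_account}.dfs.core.windows.net"


def abfss_url(container: str, storage_account: str, path: str = "") -> str:
    """Build the abfss:// URL for a path inside a container."""
    return (
        f"abfss://{container}@{storage_account}.dfs.core.windows.net/"
        f"{path.lstrip('/')}"
    )


def configure_account_key(spark, storage_account: str, access_key: str) -> None:
    """Register the access key on the Spark session; no mount is created."""
    spark.conf.set(account_key_conf(storage_account), access_key)


# In a Databricks notebook (spark and dbutils come from the runtime):
# key = dbutils.secrets.get(scope="azdatabricks-adlsgen2SA",
#                           key="Azdatrbricks-adlsgen2-accesskeys")
# configure_account_key(spark, "azadfdatalakegen2", key)
# display(dbutils.fs.ls(abfss_url("raw", "azadfdatalakegen2")))
```

Files are then addressed by their full `abfss://` URL rather than through a `/mnt/...` path.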

Note that if you use a SAS token, it must be generated at the storage account level for this to work.
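If a SAS token is used, the session configuration might be assembled as in the sketch below. The helper name `sas_configs` is hypothetical, and the configuration keys are the fixed-SAS-token settings of the ABFS driver as I understand them for recent Databricks runtimes, so treat them as an assumption to verify against the documentation for your runtime.

```python
def sas_configs(storage_account: str, sas_token: str) -> dict:
    """Spark settings for reading one ADLS Gen2 account with a fixed SAS token."""
    host = f"{storage_account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{host}": "SAS",
        f"fs.azure.sas.token.provider.type.{host}":
            "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider",
        f"fs.azure.sas.fixed.token.{host}": sas_token,
    }


# In a Databricks notebook; the scope and key names are placeholders:
# token = dbutils.secrets.get(scope="<scope>", key="<sas-token-key>")
# for name, value in sas_configs("<storage_account_name>", token).items():
#     spark.conf.set(name, value)
```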

If you still want to mount the storage account in Azure Databricks, use the following syntax:

dbutils.fs.mount(

source = "wasbs://pool@vamblob.blob.core.windows.net/",

mount_point = "/mnt/io234",

extra_configs = {"fs.azure.account.key.vamblob.blob.core.windows.net":dbutils.secrets.get(scope = "demo_secret", key = "demo123")})

Output:

Alternative method

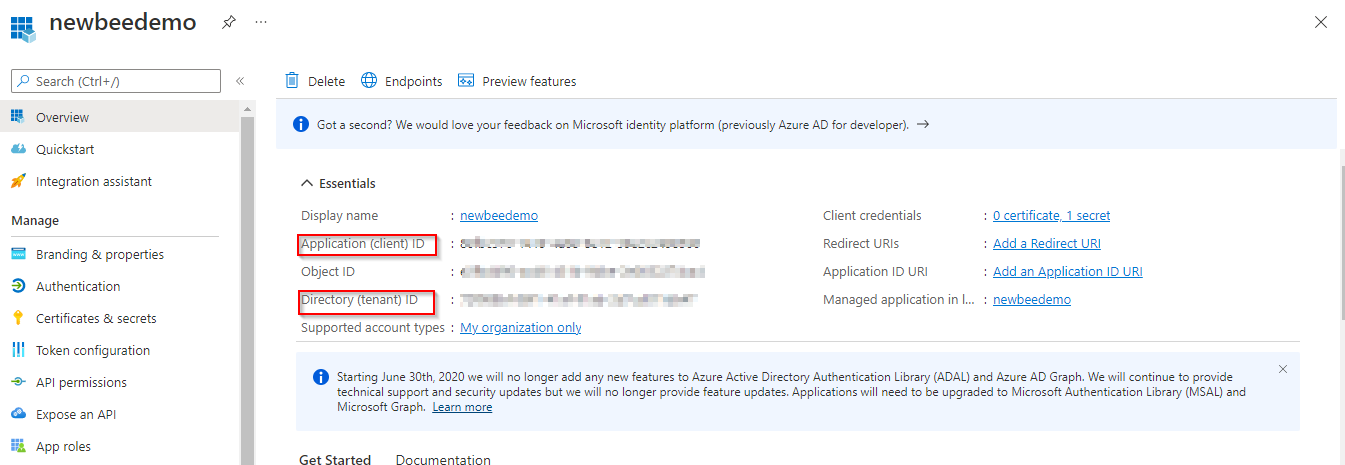

First, create an app registration in Azure Active Directory; this gives you the client ID and the tenant ID.

Then create a client secret for it in AAD.

Use the following syntax to create the mount:

configs = {"fs.azure.account.auth.type": "OAuth",

"fs.azure.account.oauth.provider.type": "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",

"fs.azure.account.oauth2.client.id": "xxxxxxxxx",

"fs.azure.account.oauth2.client.secret": "xxxxxxxxx",

"fs.azure.account.oauth2.client.endpoint": "https://login.microsoftonline.com/xxxxxxxxx/oauth2/v2.0/token",

"fs.azure.createRemoteFileSystemDuringInitialization": "true"}

dbutils.fs.mount(

source = "abfss://<container_name>@<storage_account_name>.dfs.core.windows.net/<folder_name>",

mount_point = "/mnt/<folder_name>",

extra_configs = configs)
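Calling `dbutils.fs.mount` on a mount point that is already in use raises an error, so rerunning a notebook can fail at this step. A minimal sketch of a guard, assuming the hypothetical helper name `mount_if_absent` and the `dbutils` object provided by the notebook:

```python
def mount_if_absent(dbutils, source: str, mount_point: str, extra_configs: dict) -> bool:
    """Mount source at mount_point unless that mount point is already in use.

    Returns True if a new mount was created, False if one already existed.
    """
    existing = {m.mountPoint for m in dbutils.fs.mounts()}
    if mount_point in existing:
        return False
    dbutils.fs.mount(
        source=source,
        mount_point=mount_point,
        extra_configs=extra_configs,
    )
    return True


# In a Databricks notebook, reusing the configs dictionary defined earlier:
# mount_if_absent(
#     dbutils,
#     "abfss://<container_name>@<storage_account_name>.dfs.core.windows.net/<folder_name>",
#     "/mnt/<folder_name>",
#     configs,
# )
```

The helper only checks whether the mount point exists; it does not compare the existing mount's source with the requested one.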

For more information, see this article by Ron L'Esteve.